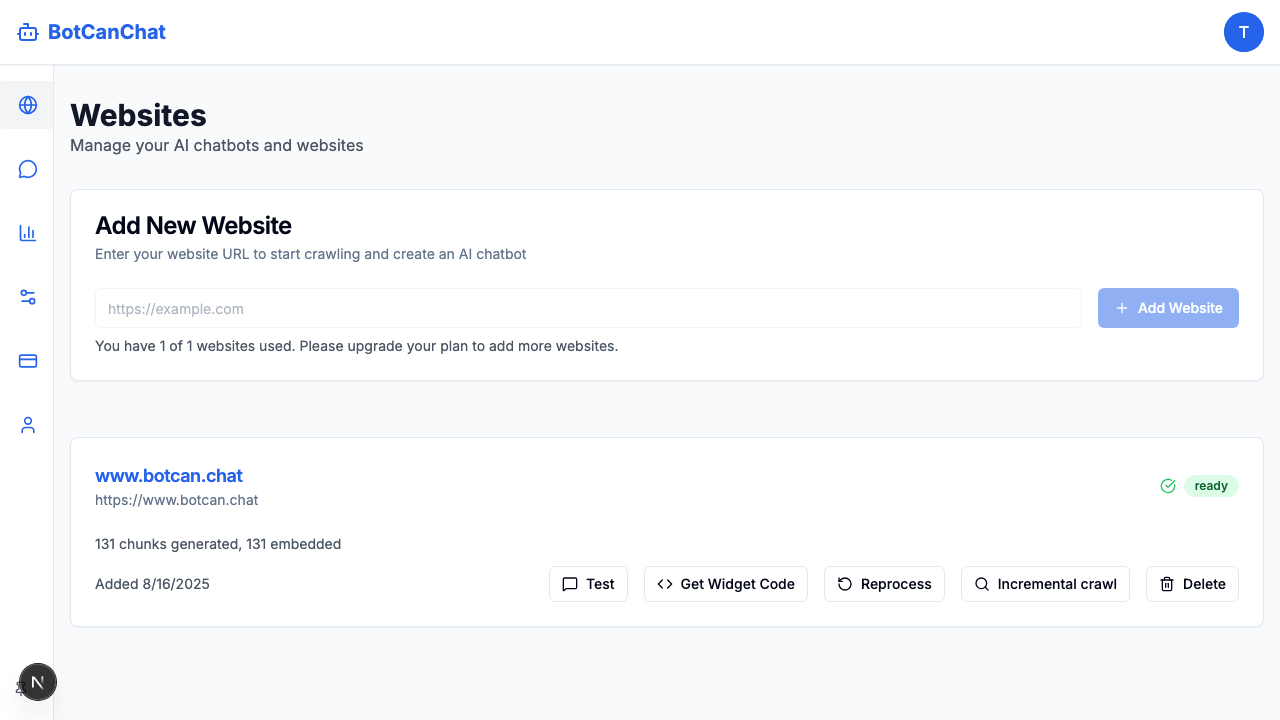

Understanding the Crawling Process

Learn how BubblaV's smart crawler reads your website and turns its content into your chatbot's knowledge base. This page explains each stage of the process and what to expect.

Crawling Process Overview

When you add a website, our smart crawler (running on Railway.com infrastructure) automatically begins analyzing your site, using Playwright browser automation to load each page and extract its content.

Smart Crawler Service

FastAPI service running on Railway.com that uses Playwright to handle JavaScript-heavy sites
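Because Playwright drives a real browser, content and links generated by JavaScript are present in the rendered page. After rendering, a crawler still has to decide which links belong to your site. The sketch below illustrates that step with Python's standard-library `html.parser` standing in for Playwright's DOM access. The `same_site_links` helper and the rule that only links on the exact same hostname are followed are assumptions for illustration, not BubblaV's actual crawler logic.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class _LinkCollector(HTMLParser):
    """Collects raw href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def same_site_links(page_url: str, html: str) -> list[str]:
    """Return absolute, de-duplicated http(s) links on the same host as page_url.

    Hypothetical helper: assumes "same site" means an identical hostname.
    """
    collector = _LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).hostname
    seen: set[str] = set()
    result: list[str] = []
    for href in collector.hrefs:
        # Resolve relative links against the page URL and drop #fragments.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.hostname == host and absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result
```

For example, on `https://example.com/docs/`, a relative `guide` link resolves to `https://example.com/docs/guide`, `/pricing#plans` becomes `https://example.com/pricing`, and links to other hosts or `mailto:` addresses are skipped.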

Content Processing

Content split into chunks and stored in PostgreSQL alongside pgvector embeddings used for AI responses
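This page doesn't specify how pages are split into chunks. A common approach is fixed-size windows with some overlap, so that a sentence cut at one boundary still appears whole in a neighbouring chunk. The sketch below assumes character-based windows; the `chunk_text` name and the default `size` and `overlap` values are hypothetical, not BubblaV's settings.

```python
from __future__ import annotations


def chunk_text(text: str, size: int = 1000, overlap: int = 200) -> list[str]:
    """Split text into overlapping character windows (hypothetical defaults)."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    text = " ".join(text.split())  # collapse whitespace left over from HTML extraction
    chunks: list[str] = []
    step = size - overlap
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

With `size=4, overlap=1`, the string `abcdefghij` becomes `abcd`, `defg`, `ghij`: each window starts three characters after the previous one and repeats one character. Each chunk would then be embedded and stored as its own row.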

Website Status Flow

Initial Status: Ready

Website created and validated, ready for crawling to begin

Status: Crawling

Smart crawler analyzes site structure, extracts content, clears old data, and generates text chunks

Status: Embedding

Text chunks converted into vector embeddings using the nomic-embed-text-v1.5 model hosted on Fireworks.ai

Status: Ready

Chatbot is trained and ready to answer questions about your website content
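The status flow above, together with the Failed status described under "When Things Go Wrong", can be modelled as a small state machine. This is a sketch under assumptions: the status strings, the `ALLOWED` transition table, and especially which states may move to `failed` are illustrative guesses, not BubblaV's actual schema.

```python
from __future__ import annotations

from enum import Enum


class Status(str, Enum):
    READY = "ready"
    CRAWLING = "crawling"
    EMBEDDING = "embedding"
    FAILED = "failed"


# Hypothetical transition table: which statuses may follow each status.
ALLOWED: dict[Status, set[Status]] = {
    Status.READY: {Status.CRAWLING},                   # a (re)crawl starts
    Status.CRAWLING: {Status.EMBEDDING, Status.FAILED},
    Status.EMBEDDING: {Status.READY, Status.FAILED},   # chatbot trained, or an error
    Status.FAILED: {Status.CRAWLING},                  # e.g. after pressing Reprocess
}


def transition(current: Status, new: Status) -> Status:
    """Return the new status, or raise ValueError if the move is not allowed."""
    if new not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {new.value}")
    return new
```

Because Ready is both the starting and the finishing status, a successful run is a full cycle: ready, crawling, embedding, ready.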

Timing Expectations

Small Websites

Under 50 pages, simple structure, mostly static content

Medium Websites

50-500 pages, some JavaScript, moderate complexity

Large Websites

500+ pages, complex JavaScript, e-commerce sites

Real-time Progress Monitoring

Dashboard Tracking

- Website Status: live updates via Supabase Realtime
- Chunk Progress: total_chunks_generated counter
- Embedding Progress: chunks_embedded counter
- Crawl Logs: detailed activity logs in the crawl_logs table
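The two counters are enough to derive a progress figure for the embedding stage. A minimal sketch, assuming a website record exposes `total_chunks_generated` and `chunks_embedded` as integers (the names come from the dashboard fields above; the `embedding_progress` helper is hypothetical):

```python
def embedding_progress(row: dict) -> float:
    """Percentage (0-100) of generated chunks that have been embedded."""
    total = row.get("total_chunks_generated") or 0
    done = row.get("chunks_embedded") or 0
    if total <= 0:
        return 0.0  # no chunks generated yet, e.g. still crawling
    # Clamp in case the embedded count briefly exceeds the total.
    return round(min(done, total) / total * 100, 1)
```

For instance, 50 embedded chunks out of 200 generated reports 25.0.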

Background Services

- Smart Crawler: https://smart-crawler-production.up.railway.app
- Embedding Worker: processes chunks in the background
- Janitor Service: checks for stalled jobs every hour
- Authentication: requests to these services are authenticated with SERVICE_AUTH_TOKEN
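The janitor's hourly check amounts to "find jobs that have sat in an in-progress status for too long". The sketch below assumes each job record carries a `status` and a timezone-aware `updated_at` timestamp, and uses a hypothetical `STALL_AFTER` threshold of 30 minutes; the real cut-off and schema aren't documented here. The second helper shows one conventional way a service could check SERVICE_AUTH_TOKEN when it is sent as a bearer token, using a constant-time comparison; the header format is also an assumption.

```python
from __future__ import annotations

import hmac
from datetime import datetime, timedelta, timezone

IN_PROGRESS = {"crawling", "embedding"}
STALL_AFTER = timedelta(minutes=30)  # hypothetical threshold


def find_stalled(jobs: list[dict], now: datetime | None = None) -> list[dict]:
    """Return jobs stuck in an in-progress status for longer than STALL_AFTER."""
    now = now or datetime.now(timezone.utc)
    return [
        job for job in jobs
        if job["status"] in IN_PROGRESS and now - job["updated_at"] > STALL_AFTER
    ]


def is_authorized(authorization_header: str | None, service_token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header against the service token."""
    prefix = "Bearer "
    if not authorization_header or not authorization_header.startswith(prefix):
        return False
    supplied = authorization_header[len(prefix):]
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(supplied.encode(), service_token.encode())
```

In a deployment, `service_token` would typically be read from the SERVICE_AUTH_TOKEN environment variable rather than hard-coded.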

When Things Go Wrong

Status: Failed

Common causes include:

- Website not publicly accessible (returns a non-200 HTTP status)
- URL failed the validateUrl() check
- Smart crawler service unavailable or timed out
- Duplicate crawl job prevented (website already being processed)
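The first two causes are checks that can run before any crawling starts. The sketch below is not BubblaV's `validateUrl()`; it is a hypothetical Python equivalent that accepts only absolute http(s) URLs with a hostname, plus a `precheck` helper that treats any status other than HTTP 200 as "not publicly accessible", matching the rule above. Fetching the status code is left to the caller, and whether redirects are followed first is not specified here.

```python
from __future__ import annotations

from urllib.parse import urlparse


def validate_url(url: str) -> str | None:
    """Return an error message if the URL is unusable, else None (hypothetical)."""
    parsed = urlparse(url.strip())
    if parsed.scheme not in ("http", "https"):
        return "URL must start with http:// or https://"
    if not parsed.hostname:
        return "URL has no hostname"
    return None


def precheck(url: str, http_status: int) -> str | None:
    """Combine URL validation with the 'must return 200' accessibility rule."""
    error = validate_url(url)
    if error:
        return error
    if http_status != 200:
        return f"site returned HTTP {http_status}, expected 200"
    return None
```

A `None` result means the crawl can proceed; any string is a reason to mark the website as Failed.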

Recovery Options

- Reprocess Button: triggers a new crawl via /api/crawl/secure
- Status Reset: clears existing content and starts fresh
- Conflict Protection: prevents multiple simultaneous crawls of the same website
- Janitor Service: automatically cleans up stalled jobs
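Conflict protection and the status reset can be combined into one atomic step: claim the website only if it isn't already being processed, and clear its old content in the same transaction. The sketch below uses SQLite from Python's standard library as a stand-in for PostgreSQL; the `websites` and `chunks` tables, their columns, and the `start_reprocess` function are hypothetical, and BubblaV's actual mechanism may differ.

```python
import sqlite3


def start_reprocess(conn: sqlite3.Connection, website_id: int) -> bool:
    """Atomically claim a website for crawling and clear its old chunks.

    Returns False if a crawl or embedding run is already in progress.
    """
    with conn:  # one transaction: commit on success, roll back on error
        cur = conn.execute(
            "UPDATE websites SET status = 'crawling' "
            "WHERE id = ? AND status NOT IN ('crawling', 'embedding')",
            (website_id,),
        )
        if cur.rowcount == 0:
            return False  # already processing (or unknown id): refuse a duplicate job
        conn.execute("DELETE FROM chunks WHERE website_id = ?", (website_id,))
    return True
```

The key design choice is that the status check and the status change happen in a single conditional UPDATE, so two simultaneous Reprocess clicks cannot both succeed. In PostgreSQL the same pattern holds under the default READ COMMITTED isolation, because a concurrent update of the same row waits for the first transaction and then re-checks the WHERE clause.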

Technical Architecture

Crawler Infrastructure

Data Processing

Next Steps

Once your website's status returns to Ready, your chatbot can be deployed on your website.